The Reliability Problem with AI Agents

Imagine a customer calls a health-insurance hotline and asks about their coverage. The call is at a critical juncture: before the agent can discuss any protected health information (PHI), it must verify the caller’s identity. That’s non-negotiable under HIPAA. But here’s the problem: suppose you give a large language model (LLM) a massive prompt that includes “first verify identity, only then access patient records, then answer the question”. Despite that instruction, the model sometimes jumps ahead, indulges in friendly chat before verification, or pulls up PHI before asking identity questions.

Why? Because the flexibility that makes LLMs powerful works against you when you need strict procedural ordering. One big prompt is just a suggestion to the model — there’s no enforced mechanism to guarantee the identity check happens first every time.

A human agent, by contrast, instinctively knows the workflow: first, they switch into “verification mode” (“May I confirm your identity?”); then, once cleared, they transition into “helping mode” (“Great, here’s what I found for you”). Humans maintain the boundary. A flat, monolithic, prompt-driven agent struggles with those stage transitions.

At Observe.AI, we encountered this exact issue. An agent driven by a single large prompt simply couldn’t deliver enterprise-grade consistency. The following sections walk through the architecture evolution that solved it.

Understanding Agent Architectures

First, let’s define “AI agent” in our context. It’s an LLM-driven entity that combines:

- A structured prompt (description of role, guardrails, personality)

- Context (conversation history, state)

- External tools (APIs, knowledge bases, function calls)

It interacts through roles: system message (rules/context), user message (input), assistant message (agent output). That allows a multi-turn interaction in which the agent reasons, accesses tools, and takes action.
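To make the roles concrete, here is a minimal Python sketch of a multi-turn exchange stored as a list of role-tagged messages, following the common chat-API convention of `role`/`content` dictionaries. The `add_turn` helper and the message text are illustrative assumptions, not a specific vendor's API or Observe.AI's internal format.

```python
from typing import Dict, List

Message = Dict[str, str]

# Only the three roles described above are accepted in this sketch.
ALLOWED_ROLES = {"system", "user", "assistant"}


def add_turn(history: List[Message], role: str, content: str) -> List[Message]:
    """Append one role-tagged message to the conversation and return it."""
    if role not in ALLOWED_ROLES:
        raise ValueError(f"unknown role: {role}")
    history.append({"role": role, "content": content})
    return history


history: List[Message] = []
# System message: rules and context that apply to the whole conversation.
add_turn(history, "system", "You are a health-insurance support agent. Verify identity before discussing PHI.")
# User and assistant turns then alternate across the multi-turn exchange.
add_turn(history, "user", "What does my plan cover for physical therapy?")
add_turn(history, "assistant", "Happy to help. First, may I confirm your date of birth?")

for message in history:
    print(f"{message['role']}: {message['content']}")
```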

Architecturally, there are two broad approaches:

- Flat Agent: One large system prompt at the beginning. The agent has access to all tools, all instructions, from the get-go.

- Orchestrated Agent: A modular, graph-based design where behavior is broken into nodes (tasks) and transitions (edges) are explicitly defined.

Let’s compare.

The Flat Agent: Why One Big Prompt Doesn’t Scale

In a flat agent model, you might craft a 2,000-token prompt that says: “You are the assistant. First do X. Then if the user asks for Y, do this. If they ask for Z, use tool A. If at any point you encounter PHI, ask for identity first. You have tools A, B, C. Access tool A if you need to fetch …” and so on.

Sounds comprehensive. But in practice, we found critical limitations:

- Prompt bloat: When everything is jammed into a single system message, the LLM’s compliance degrades. The sheer volume of instructions burdens the model’s ability to keep track.

- No guaranteed ordering: You might write “first verify identity”, but the model can still call tool B before identity verification. The prompt cannot enforce strict “must do this first” logic.

- Conflicting roles: The same prompt may say: “During verification, you are strict; after verification, you are helpful.” But the model doesn’t reliably transition personality or tone at the right moment.

- Tool overload: Giving the agent access to 15+ tools from the start increases the chance it will pick the wrong tool or jump to a final step prematurely.

- Maintenance complexity: If something breaks, you must dig into a massive prompt to debug. There’s minimal modularity.

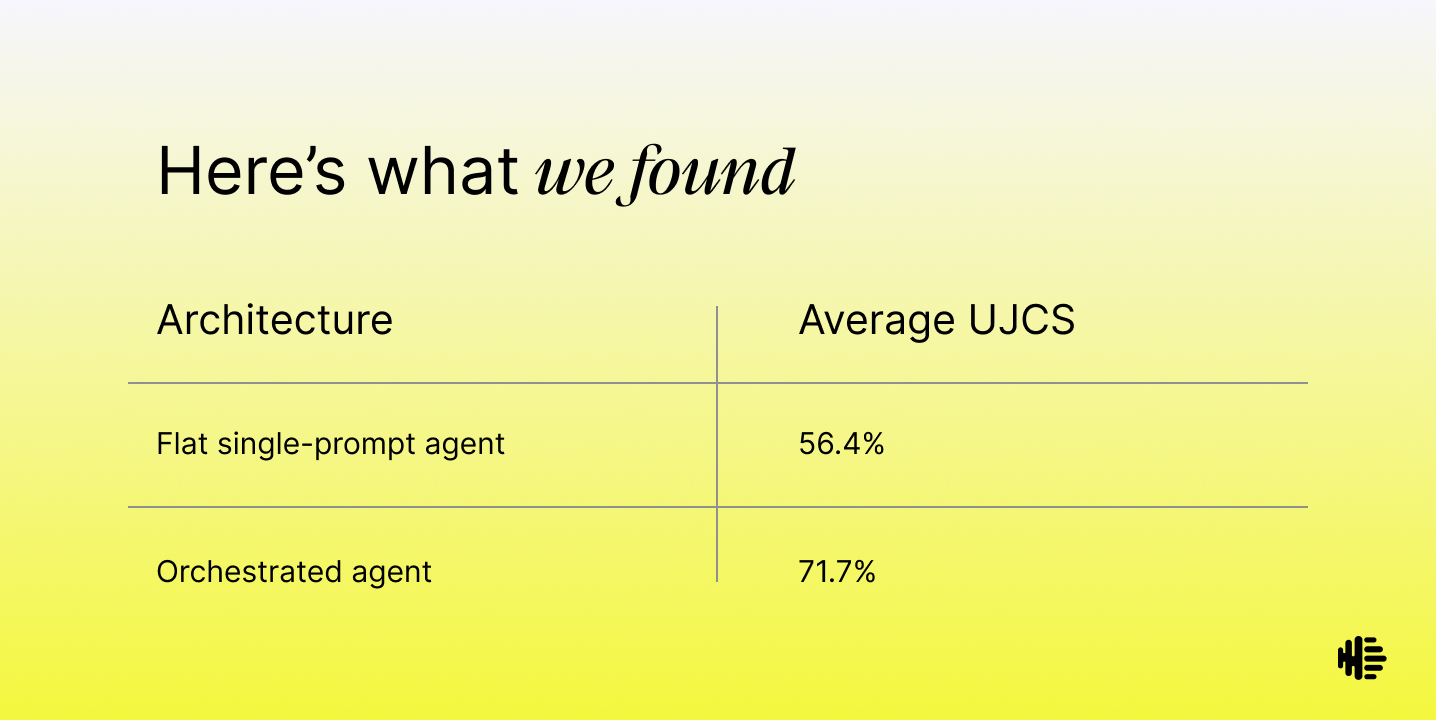

- Real-world fragility: In our internal study across 703 customer service conversations in three business domains (e-commerce, loans, telecommunications), flat agents achieved only ~56.4% adherence to required business procedures (our metric: User Journey Coverage Score, or UJCS).

Note: We used high-quality, LLM-generated synthetic conversations to systematically test diverse scenarios, including edge cases. We validated this data against our production QA rubrics, where it scored 84.37%, comparable to real interactions.

The insight: treating the agent as a single giant function is like building a legacy monolith. It doesn’t scale. We needed modularity and structure.

Measuring What Matters: Our Internal Study

Before redesigning, we validated our concerns by measuring both flat and orchestrated architectures on the same 703 conversations. Our key metric is the User Journey Coverage Score (UJCS), which currently stands at 71.7%. UJCS measures whether the agent executes the required procedures and passes the correct parameters across all scenarios. It is not just an ideal-path success rate: it also covers challenging failure modes such as tool errors and incomplete user information. A 71.7% score represents high coverage and gives us a tangible metric to "hill climb" against.
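The exact UJCS formula is not spelled out here, so what follows is only a hypothetical sketch of a coverage-style metric in the same spirit. Each scenario lists its required steps (a tool plus required parameter values); a step counts as covered only if the agent made a matching call in the required order, and the overall score is the mean coverage across scenarios. `Step`, `scenario_coverage`, `coverage_score`, and the tool names are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass
class Step:
    """One procedural step: a tool call plus the parameters it must carry."""
    tool: str
    params: Dict[str, Any] = field(default_factory=dict)


def step_matches(required: Step, actual: Step) -> bool:
    """Same tool, and every required parameter present with the expected value."""
    return required.tool == actual.tool and all(
        actual.params.get(key) == value for key, value in required.params.items()
    )


def scenario_coverage(required: List[Step], actual: List[Step]) -> float:
    """Fraction of required steps matched, in order, by the agent's actual calls."""
    if not required:
        return 1.0
    matched = 0  # also the index of the next required step to look for
    for call in actual:
        if matched < len(required) and step_matches(required[matched], call):
            matched += 1
    return matched / len(required)


def coverage_score(scenarios: List[Tuple[List[Step], List[Step]]]) -> float:
    """Mean per-scenario coverage over (required, actual) pairs."""
    if not scenarios:
        raise ValueError("need at least one scenario")
    return sum(scenario_coverage(req, act) for req, act in scenarios) / len(scenarios)


required = [
    Step("verify_identity", {"member_id": "M123"}),
    Step("get_coverage", {"member_id": "M123"}),
]
in_order = [
    Step("verify_identity", {"member_id": "M123", "dob": "1980-01-01"}),
    Step("get_coverage", {"member_id": "M123"}),
]
out_of_order = [
    Step("get_coverage", {"member_id": "M123"}),
    Step("verify_identity", {"member_id": "M123"}),
]
print(coverage_score([(required, in_order), (required, out_of_order)]))  # → 0.75
```

Because matching is ordered, fetching coverage before verifying identity only earns credit for the verification step, which is the kind of sequencing failure a flat prompt cannot rule out.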

Notably, a smaller, cheaper model (e.g., GPT-4o-mini) combined with orchestration achieved ~64.9% adherence, outperforming a larger model with a flat architecture.

Our key takeaway: architecture matters more than model size when reliability is the goal.

Agent Orchestration: Structure Meets Flexibility

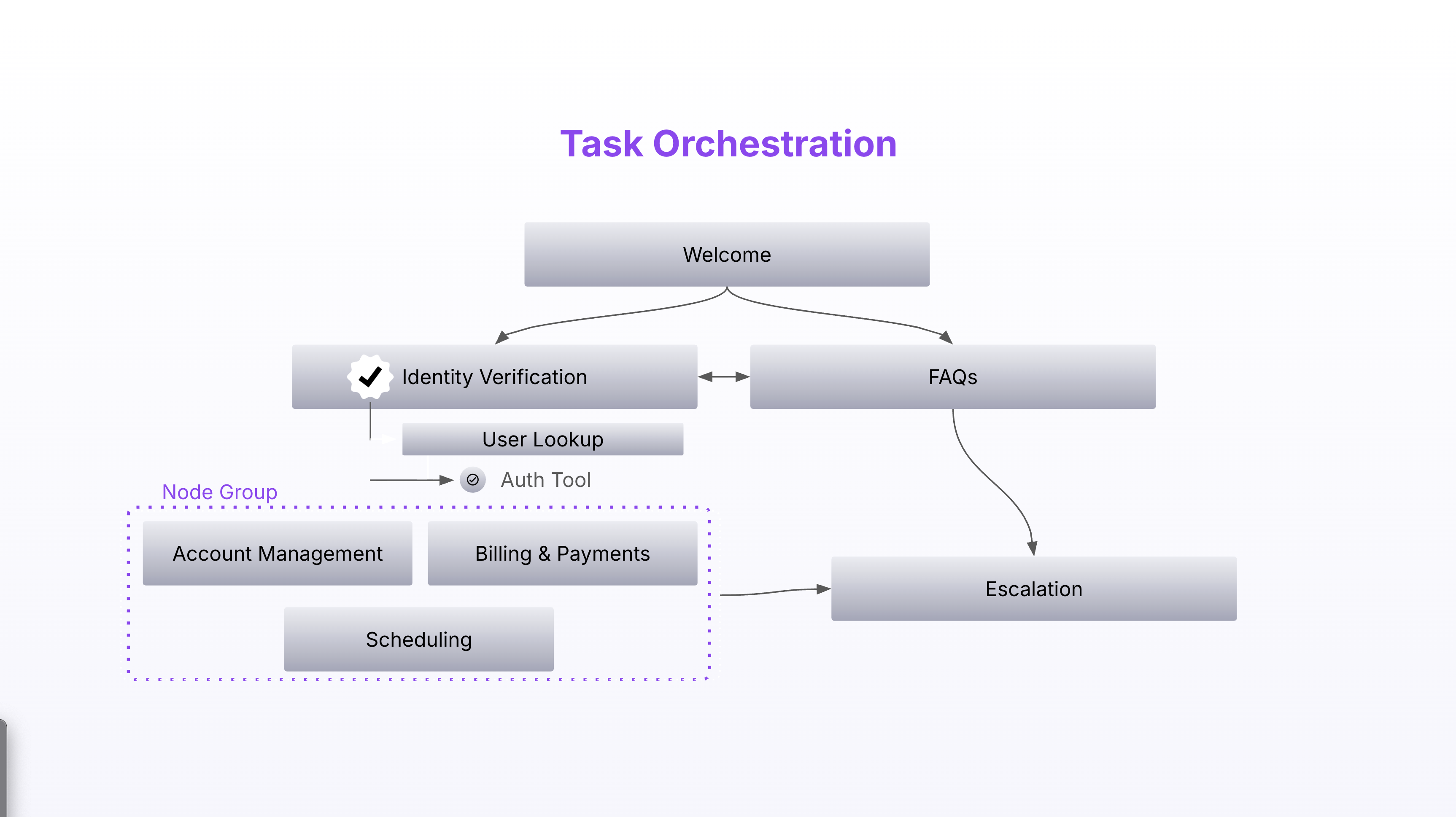

So what is “orchestration” in this context? It’s the approach of modelling the agent workflow as a graph: nodes correspond to discrete tasks (e.g., “verify identity”, “fetch coverage details”, “offer next steps”), while edges control allowed transitions (e.g., you cannot fetch coverage details until identity is verified). In other words: explicit control over sequencing.
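As a hedged illustration of what explicit control over sequencing can mean in code, the sketch below encodes each allowed edge as a guard function over the conversation state. The coverage step simply cannot be entered until `identity_verified` is true, whatever the model proposes. The node names, the `EDGES` table, and the state keys are hypothetical and are not Observe.AI's Agent Blueprint API.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, bool]
Guard = Callable[[State], bool]

# Each allowed edge maps (from_node, to_node) to a guard on the conversation state.
# Any transition not listed here is forbidden outright.
EDGES: Dict[Tuple[str, str], Guard] = {
    ("verify_identity", "fetch_coverage"): lambda state: state.get("identity_verified", False),
    ("fetch_coverage", "offer_next_steps"): lambda state: True,
}


def allowed_next(current: str, state: State) -> List[str]:
    """Nodes reachable from `current` whose guards pass for the given state."""
    return [dst for (src, dst), guard in EDGES.items() if src == current and guard(state)]


def transition(current: str, target: str, state: State) -> str:
    """Move to `target` only if the edge exists and its guard passes; otherwise stay."""
    guard = EDGES.get((current, target))
    if guard is None or not guard(state):
        return current
    return target


state: State = {"identity_verified": False}
print(transition("verify_identity", "fetch_coverage", state))  # → verify_identity
state["identity_verified"] = True
print(transition("verify_identity", "fetch_coverage", state))  # → fetch_coverage
```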

This aligns with how many modern AI-agent frameworks define design patterns: you break down tasks, assign each piece to specialized agents or modules, and coordinate the transitions between them.

In practice at Observe.AI, our Agent Blueprint might look like:

- Node A: Identity Verification

- Node Group (accessible only after successful Identity Verification):

  - Node B: Account Management

  - Node C: Billing and Payments

  - Node D: Scheduling

- Node E: FAQs

- Node F: Escalation or Human Hand-Off

Transitions between nodes are defined by requirements: for example, A → Group (B to D) → F …, or, if Identity Verification fails, A → E, with Escalation (F) available as a safeguard. Each node is a lean prompt that controls a single task and has only the tools that task needs. This keeps the conversation on track with the required procedure while preserving the natural conversational flow of an AI agent.
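The Blueprint's transition rules can be sketched as a small lookup, assuming a single boolean captures the outcome of Node A. Everything here is hypothetical: movement among B, C, and D and escalation from the FAQ node are assumptions added for illustration, not rules stated above.

```python
from typing import List, Set

# Hypothetical encoding of the Blueprint: A = Identity Verification,
# B-D = gated group, E = FAQs, F = Escalation or Human Hand-Off.
GATED_GROUP: Set[str] = {"B", "C", "D"}


def next_nodes(node: str, verified: bool) -> Set[str]:
    """Nodes the agent may move to from `node`, given the verification outcome."""
    if node == "A":
        # Success opens the gated group; failure allows only FAQs or escalation.
        return (GATED_GROUP | {"F"}) if verified else {"E", "F"}
    if node in GATED_GROUP:
        # Assumption: the caller may move between gated nodes or escalate.
        return (GATED_GROUP - {node}) | {"F"}
    if node == "E":
        return {"F"}  # assumption: the FAQ node can always escalate
    return set()  # F (escalation / hand-off) ends the flow


def is_valid_path(path: List[str], verified: bool) -> bool:
    """True if the path starts at A and every hop is an allowed transition."""
    if not path or path[0] != "A":
        return False
    return all(nxt in next_nodes(cur, verified) for cur, nxt in zip(path, path[1:]))


print(is_valid_path(["A", "B", "C", "F"], verified=True))  # → True
print(is_valid_path(["A", "C"], verified=False))           # → False
print(is_valid_path(["A", "E", "F"], verified=False))      # → True
```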

Why does this help? Because it delivers predictable workflows with auditability, modular upkeep, easier debugging, and safer compliance boundaries.

While orchestration introduces structure, it doesn’t make the system rigid or deterministic. Each node still uses reasoning within its boundaries, such as deciding how to phrase, interpret, or clarify, while staying on task. The result is a balance between creative flexibility in conversation and strict control over compliance and flow.

Inside the Agent Blueprint

The core idea behind our Agent Blueprint orchestration framework is simple: structure the agent's behavior as a graph where each node represents a focused task, and transitions between nodes are explicitly controlled.

Instead of one 2,000-line function, you write 10 focused functions of 200 lines each. This framework is what allows us to define multiple "agentic behaviors" and control the transitions between them.

So what is a node (task)?

Nodes are how we define the agent's distinct agentic behaviors. In our graph, each node defines a self-contained task with:

- Focused instructions: A specific system prompt for just this phase.

- Scoped tool access: Only the tools relevant to this task.

- Transition conditions: Explicit rules defining when and how to move to the next node.
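A node with those three ingredients might look like the following sketch. The `Node` dataclass, the `verify_member` stub, and the node names are hypothetical; the point is that tool scoping is enforced in code, so calling a tool outside the node's list raises an error instead of relying on the prompt to discourage it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Condition = Callable[[dict], bool]


@dataclass
class Node:
    """One self-contained task in the orchestration graph."""
    name: str
    instructions: str                     # focused system prompt for this phase only
    tools: Dict[str, Callable[..., str]]  # scoped tool access
    transitions: List[Tuple[Condition, str]] = field(default_factory=list)  # (condition, next node)

    def call_tool(self, tool_name: str, **kwargs) -> str:
        """Run a tool only if it is in this node's scope."""
        if tool_name not in self.tools:
            raise PermissionError(f"{tool_name!r} is not available in node {self.name!r}")
        return self.tools[tool_name](**kwargs)

    def next_node(self, state: dict) -> Optional[str]:
        """Return the first transition whose condition holds, or None to stay here."""
        for condition, target in self.transitions:
            if condition(state):
                return target
        return None


def verify_member(member_id: str, dob: str) -> str:
    """Stub standing in for a real identity-verification API."""
    return "verified" if member_id and dob else "failed"


verification = Node(
    name="identity_verification",
    instructions="Verify the caller's identity. Do not discuss coverage or any PHI yet.",
    tools={"verify_member": verify_member},
    transitions=[(lambda state: state.get("verified", False), "benefits_inquiry")],
)

print(verification.call_tool("verify_member", member_id="M123", dob="1980-01-01"))  # → verified
print(verification.next_node({"verified": True}))  # → benefits_inquiry
```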

Let's use a health insurance support example. Instead of one flat prompt, we have:

- Node 1 (Pre-Interaction): Gather call context metadata

- Node 2a (Verified Customer Path): If caller ID matches records, capture intent with full tool access

- Node 2b (Unverified Customer Path): If caller ID doesn't match, verify identity first

- Node 3 (Member Services - Benefits Inquiry): Handle medical coverage questions

- Node 4 (Member Services - Provider Lookup): Check network status

- Node 5 (Member Services - ID Card Management): Process ID card replacements

- Node 6 (Post-Interaction): Wrap up and log call

Dynamic System Prompt Injection: The Key Innovation

Here's where orchestration becomes powerful. As the agent transitions from one node to another, we dynamically replace the task-specific part of the system prompt. The agent’s core “description, guardrails, and personality” remain, but its immediate task instructions and tools are swapped out. The conversation history is preserved while the agent’s focus and capabilities shift, invisibly to the caller, unlocking the next stage of the service journey.
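A minimal sketch of this prompt swap, assuming the conversation history is stored without a system message and the system message is rebuilt for the active node on every turn. `CORE_PROMPT`, `NODE_PROMPTS`, and `build_messages` are illustrative names; a node's tool list could be swapped the same way.

```python
from typing import Dict, List

Message = Dict[str, str]

# Stable across the whole call: description, guardrails, personality.
CORE_PROMPT = (
    "You are a courteous health-insurance support agent. "
    "Never disclose PHI to a caller whose identity has not been verified."
)

# Task-specific instructions, swapped in and out as the active node changes.
NODE_PROMPTS: Dict[str, str] = {
    "identity_verification": "Current task: verify the caller's identity with member ID and date of birth.",
    "benefits_inquiry": "Current task: answer the verified member's coverage questions.",
}


def build_messages(active_node: str, history: List[Message]) -> List[Message]:
    """Rebuild the system message for the active node; keep the conversation as-is."""
    system = {"role": "system", "content": f"{CORE_PROMPT}\n\n{NODE_PROMPTS[active_node]}"}
    return [system] + history


history: List[Message] = [
    {"role": "user", "content": "Does my plan cover physical therapy?"},
    {"role": "assistant", "content": "I can check. May I have your member ID and date of birth?"},
    {"role": "user", "content": "M123, January 1, 1980."},
]

before = build_messages("identity_verification", history)
after = build_messages("benefits_inquiry", history)  # e.g. once verification succeeds
print(after[0]["content"])
```

The caller sees one continuous conversation because the user and assistant turns are untouched; only the system message changes between nodes.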

Why This Matters for Enterprise

From a technical leadership perspective, here are key reasons this matters:

- Reliability and compliance at scale: If agents must follow strict compliance sequences (identity verification, consent collection, PHI handling), you need an architecture that enforces order, not just implies it.

- Maintainability: Modular nodes mean updates (e.g., new verification logic, new tool integration) can be made in isolation without rewriting the whole prompt.

- Scalability: As you extend from a single domain (say, health insurance) to others (banking, commercial insurance, healthcare navigation), you reuse nodes, mix and match transitions, and grow the graph incrementally.

- Technology and cost leverage: Internal data shows that orchestration can outperform monolithic prompts even with smaller, cheaper models — giving you more headroom for experimentation without sacrificing quality.

- Audit trails and explainability: With clear nodes and transitions, it’s easier to trace a conversation flow, isolate failure points, and produce logs for governance or regulatory review.

Implementation Tips for the C-Suite

Here are some practical pointers for your leadership teams as you move from pilots to production:

- Define your procedural workflow first: For every conversation type (e.g., health plan inquiry, claim submission), document the required steps, decision points, hand-offs, and compliance gates.

- Break the workflow into nodes: Map each node to one prompt + tool access + context state. Keep each node focused and light.

- Set explicit transition rules: Create a transition map. For example, Node A can move to Node B or the Escalation node, but not to Node C until verification succeeds.

- Minimize tool access per node: Each node should only see the tools it needs. Don’t give the entire system access to every tool at every stage.

- Monitor adherence: Use metrics like UJCS (or comparable) that consider both correct sequences and correct tool usage.

- Iterate and modularize: Need to update verification logic? Change Node A. Need to add a new domain? Create new nodes and transitions rather than rebuilding from scratch.

- Governance and logging: Because you have modular nodes, you can capture logs per node, analyze failures, and support audit/regulatory reviews more easily.
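Per-node logging can be as simple as tagging every event with the node that produced it. The sketch below is a hypothetical in-memory audit log that exports JSON Lines; the `AuditLog` class and the event names (`enter`, `tool_call`, `transition`) are assumptions for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class AuditEvent:
    node: str    # which node produced the event
    event: str   # e.g. "enter", "tool_call", "transition"
    detail: str = ""
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class AuditLog:
    """Append-only list of events, filterable by node, exportable as JSON Lines."""

    def __init__(self) -> None:
        self.events: List[AuditEvent] = []

    def record(self, node: str, event: str, detail: str = "") -> None:
        self.events.append(AuditEvent(node, event, detail))

    def for_node(self, node: str) -> List[AuditEvent]:
        return [e for e in self.events if e.node == node]

    def to_jsonl(self) -> str:
        return "\n".join(json.dumps(asdict(e)) for e in self.events)


log = AuditLog()
log.record("identity_verification", "enter")
log.record("identity_verification", "tool_call", "verify_member")
log.record("identity_verification", "transition", "-> benefits_inquiry")
log.record("benefits_inquiry", "enter")
print(log.to_jsonl())
```

Filtering by node (for example, `log.for_node("identity_verification")`) isolates exactly the steps a reviewer needs when auditing a compliance gate.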

Conclusion

When it comes to AI Agents, you’re not just picking a model or sandboxing a pilot. You’re building a reliable, scalable architecture that must meet business outcomes: consistency, compliance, transparency, and performance. The flat single-prompt approach may get you started, but it won’t sustain enterprise demands.

An orchestrated model gives you structure without sacrificing flexibility. It lets you retain control over sequence, tool access, tone transitions, and compliance gates — while still leveraging the power of LLMs. If you’re looking to deploy AI agents in regulated workflows (healthcare, insurance, financial services), this shift isn’t optional — it’s essential.

When your teams promise to “answer every call” and mean it, they need more than an LLM. They need an orchestrated agent system that stays the course.

Ready to orchestrate smarter, more consistent AI agents? Get a demo.