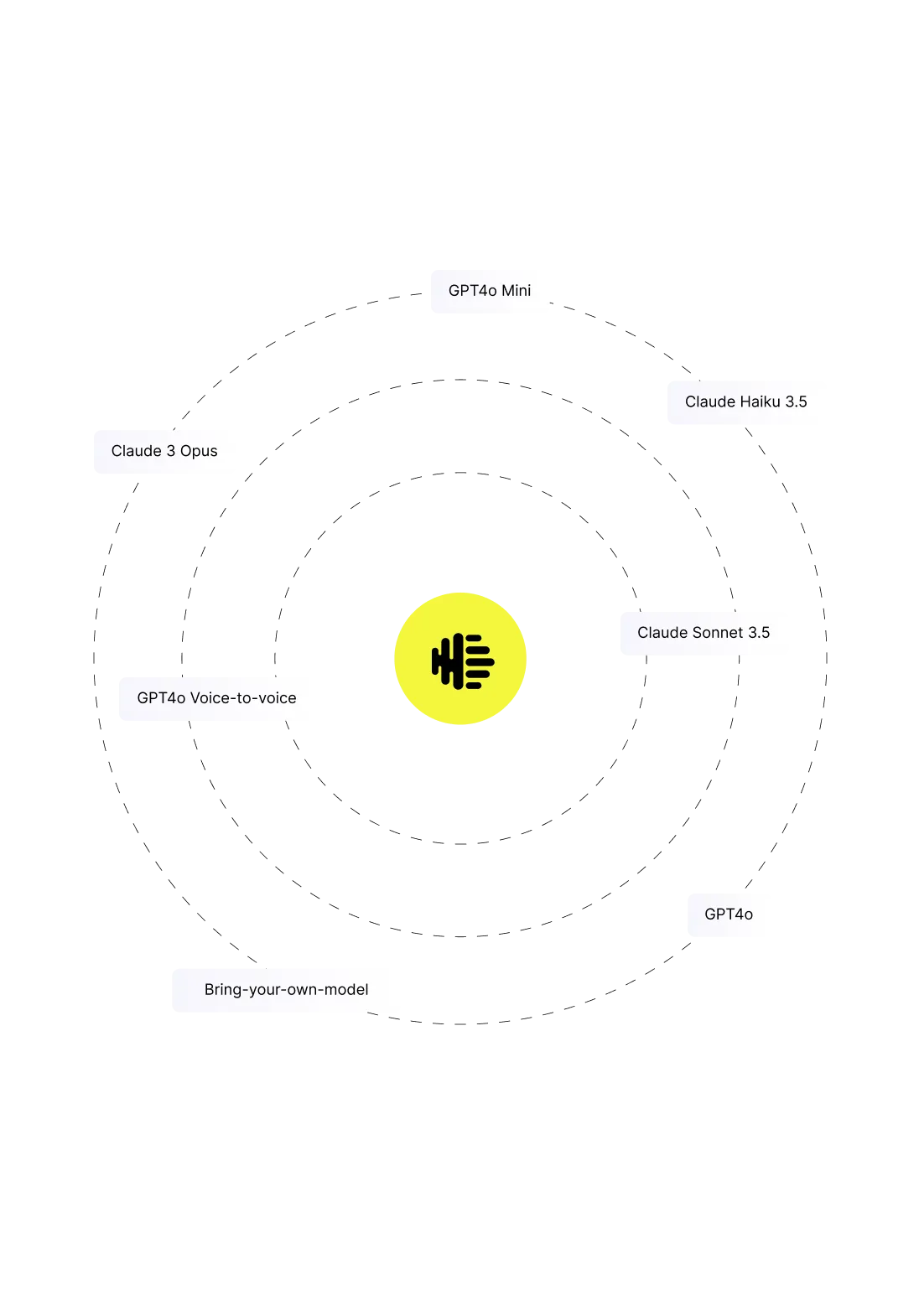

The Guardian Framework ensures that our AI Agents perform to your security, compliance, and reliability standards.

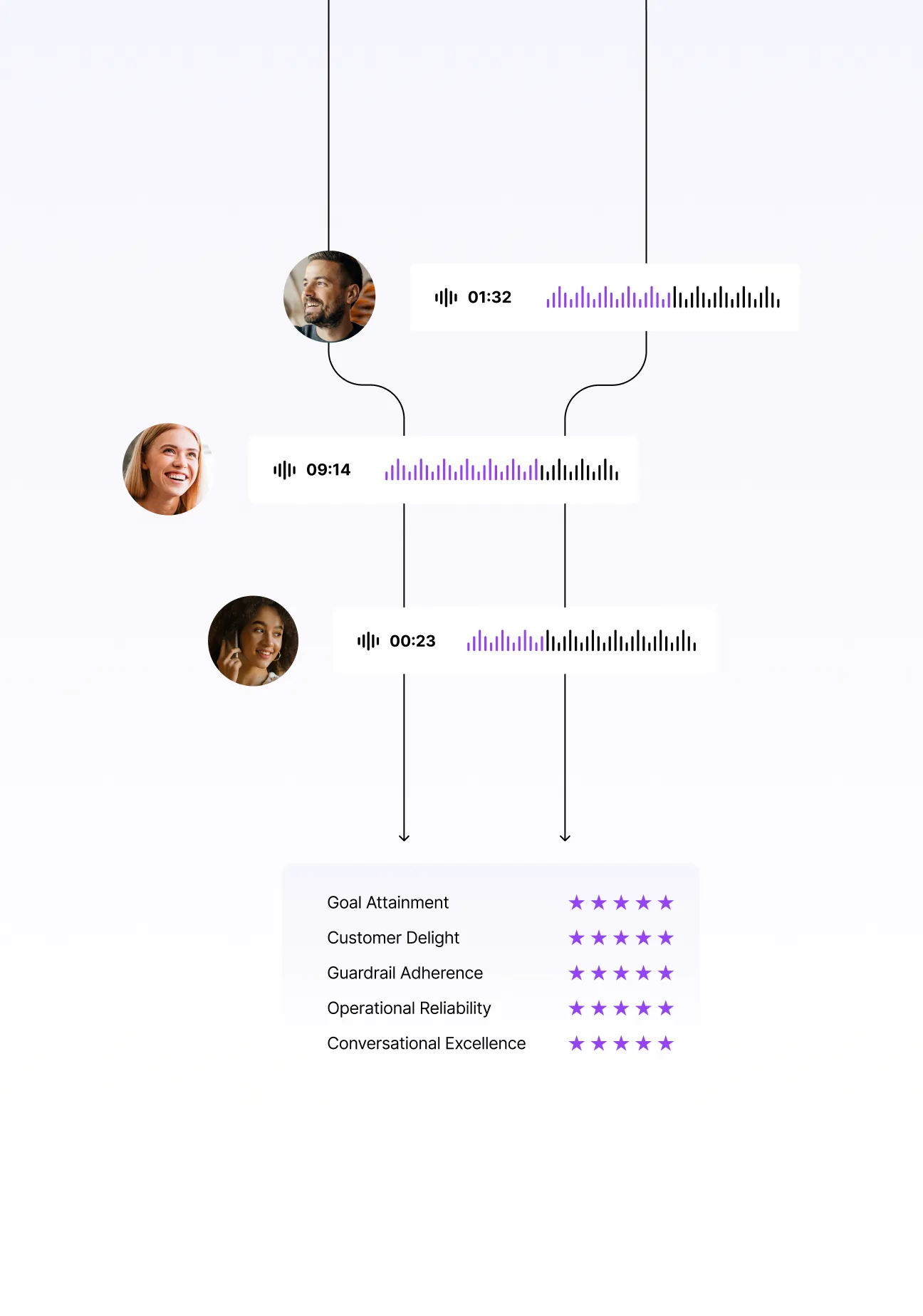

Pre- and in-deployment evaluations deliver total visibility and drive continuous learning.

Validate every new or updated prompt using real-world simulation to ensure your AI Agents perform as expected.

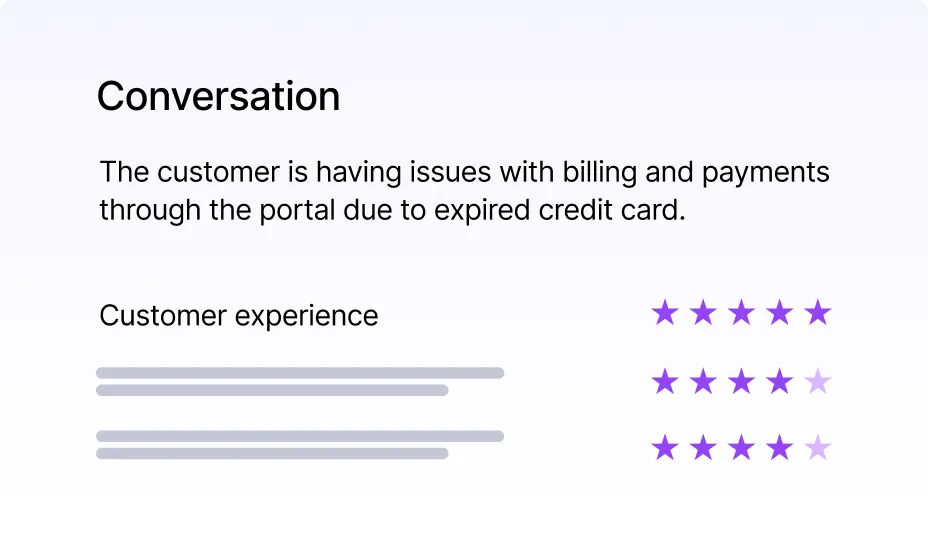

Prove compliance on every call, evaluate outcomes, and trace failures instantly with built-in monitoring and audit logs.

Audit every single interaction and easily identify the exact points of failure with automated performance reporting.

At every stage of the lifecycle, AI Agents are continuously evaluated for:


Ready to take your department from a cost center to a strategic revenue division? We’re here to help.